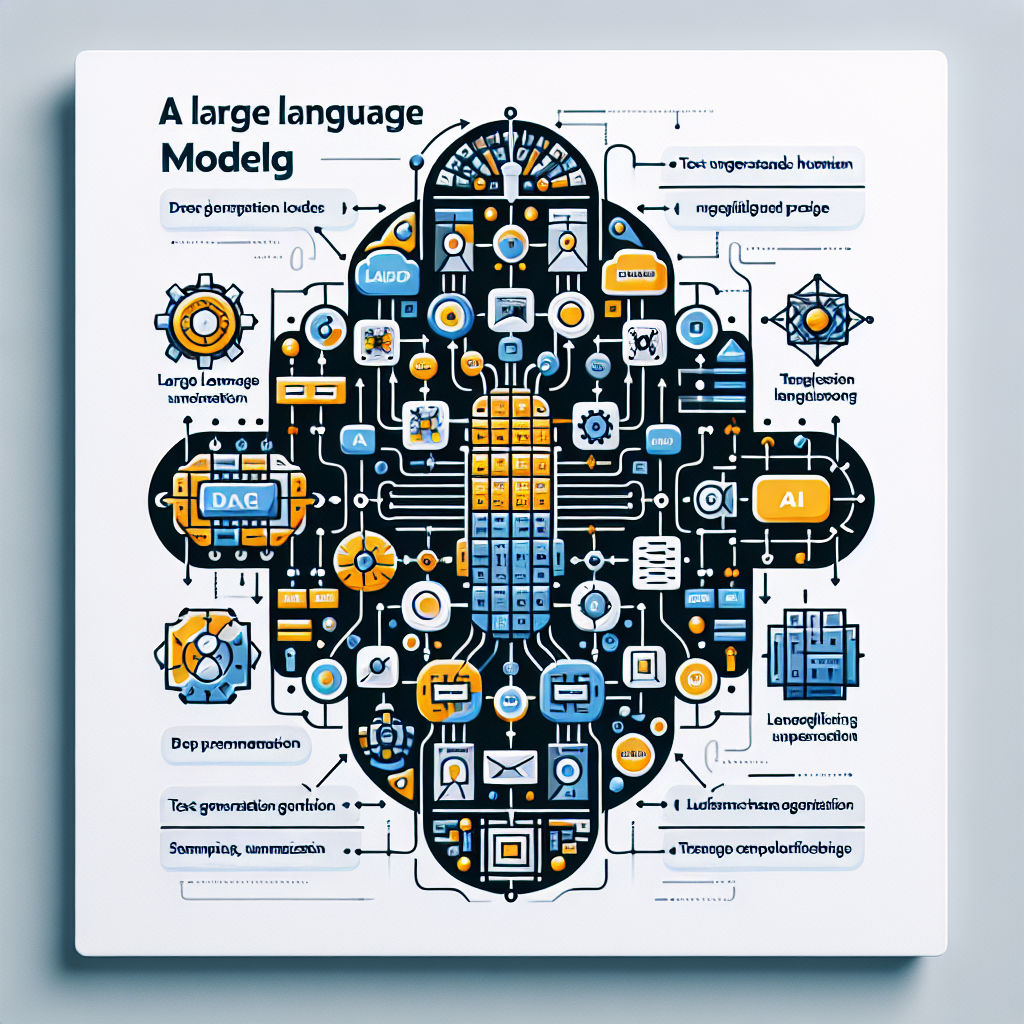

What is a Large Language Model?

A large language model (LLM) is a type of advanced AI system that can understand and generate human language. It uses deep learning, especially neural networks, to process text and learn the relationships between words, which lets it capture the context, syntax, and semantics of a language. This is what allows LLMs to handle a wide range of tasks, including text generation, summarization (long-form or short), translation, and question answering.

As LLMs gain popularity as next-generation language processing tools, many industry practitioners and AI/ML enthusiasts have shifted their focus toward developing practical use cases. More organizations are looking to leverage LLMs for better customer engagement, more automated content production, and smarter data analytics. OpenAI's GPT models, for example, are well known for producing entire pages of coherent, contextually relevant text.

Key Components of Large Language Models

Understanding Transformers

Transformers are the backbone of modern LLMs. They rely on a mechanism called self-attention, introduced in the paper Attention Is All You Need, which lets the model weigh how important each word in a sentence is relative to the others when generating an output. This gives the model a much better grasp of context and of how words relate to each other, which results in output that is easier for people, and for downstream tools that consume model output, to use.

Because transformers process the tokens of a sequence in parallel rather than one at a time, training is faster and more efficient. The transformer architecture has become the de facto standard for building LLMs, and it gives researchers and developers a solid foundation for building robust language models.
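To make the idea of weighing words concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It assumes tiny hand-picked 2-dimensional vectors and, for brevity, uses the same vectors as queries, keys, and values. Real transformers learn separate projections for each, and the function names and numbers here are illustrative only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention over equal-length vectors.

    Simplification (assumption): queries, keys, and values are all the
    input vectors themselves, with no learned projections.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word in the sentence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-word sentence.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
```

In this toy input, the first word gives more weight to the second word (a similar vector) than to the third (a dissimilar one), which is the sense in which attention "weighs" words against each other.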

Self-Supervised Learning and Language Understanding

Self-supervised learning is a central method in training LLMs. Supervised learning depends on annotated datasets, whereas self-supervised learning works on unannotated data by predicting parts of the input from the rest. For example, the model is trained to guess the next word of a sentence given the earlier words. The training signal comes from the text itself, so no manual human annotation is needed.

This is especially useful when training models from scratch, because it removes the need for a large labeled dataset while still providing a strong learning signal. With billions or even trillions of words of text available, LLMs can learn language at a depth that would be impractical to reach with labeled data alone.
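The next-word objective can be illustrated with a short sketch that turns raw, unlabeled text into training pairs. The function name and the `context_size` parameter are hypothetical, and splitting on whitespace is a simplification of real tokenization.

```python
def next_word_examples(text, context_size=3):
    """Build (context, target) training pairs from unlabeled text."""
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        # The words before position i are the input; word i is the label.
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples

examples = next_word_examples("the cat sat on the mat")
for context, target in examples:
    print(" ".join(context), "->", target)
```

Every label here comes from the sentence itself, which is why no human annotation is required.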

Dataset Requirements for LLM Training

High-quality, diverse datasets are necessary for training large language models. To learn effectively, LLMs typically need hundreds of gigabytes of text data. This data should span multiple languages, audiences, and topics so that the model can give coherent, relevant responses in each context.

A more comprehensive model, one that can grasp varied linguistic and cultural references, comes from drawing on diverse sources (books, papers, websites, social media, etc.). This is where data preprocessing becomes important: it removes noise and maintains the integrity of the training dataset.

Steps to Build a Large Language Model from Scratch

Preparing for Model Training

Before beginning to build an LLM, it is crucial to establish clear use cases and goals for the project. Knowing up front how you intend to use the model will guide your choices of architecture, dataset selection, and training strategy. Gathering your resources early is also crucial: you need the hardware, software, and datasets all set up for training to go smoothly.

The importance of choosing an appropriate dataset is hard to overstate. This means selecting the right data: enough to cover your use cases, but not so much that it cannot be cleaned and checked carefully. The higher the quality of the dataset, the more accurate the trained model, which makes this step worth the time invested during development.

Selecting the Right Model Architecture

One of the critical steps in LLM development is selecting a suitable model architecture. There are many architectures to choose from, but transformer models have become the de facto backbone on which most of these choices build. Some designs are optimized for speed and efficiency at the cost of fidelity (for example, they may miss the more nuanced forms found in intricate English prose), while others favor output quality at the cost of more computation.

Each architectural style comes with its own advantages and disadvantages. The best structure depends on the specific requirements of your application.

Data Preprocessing for Effective Training

Data preprocessing refers to preparing input data before it is fed into the model: cleaning it, normalizing it, and tokenizing it. This step is essential for keeping data consistent and relevant, which directly affects the model's output. Eliminating duplicates, correcting errors, and standardizing text formats yields cleaner datasets.

LLM performance depends heavily on tokenization, i.e., the process of converting input text into tokens (such as words or subwords). Tokenization lets the model represent and process language as a sequence of discrete units.
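As a rough illustration of these cleaning steps, the sketch below normalizes text, strips simple HTML tags, and removes duplicates and very short documents. The function names, the `min_chars` threshold, and the sample documents are assumptions made for the example; production pipelines are considerably more involved.

```python
import re
import unicodedata

def clean_text(text):
    """Normalize one document: Unicode form, HTML tags, whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)      # drop simple HTML tags
    text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
    return text

def preprocess(documents, min_chars=10):
    """Clean documents, then drop very short ones and exact duplicates."""
    seen = set()
    kept = []
    for doc in documents:
        cleaned = clean_text(doc)
        key = cleaned.lower()  # case-insensitive duplicate check
        if len(cleaned) < min_chars or key in seen:
            continue
        seen.add(key)
        kept.append(cleaned)
    return kept

raw = [
    "<p>Large language models   learn from text.</p>",
    "large language models learn from text.",
    "ok",
    "Tokenization splits text\ninto smaller units.",
]
corpus = preprocess(raw)
```

Of the four sample documents, two survive: the second is a duplicate of the first once cleaned, and the third is too short to keep.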
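A minimal word-level tokenizer shows the basic idea of mapping text to integer IDs. This is a sketch under simplifying assumptions: production LLMs generally use subword schemes such as byte-pair encoding, and the `<unk>` token and helper names here are illustrative choices.

```python
import re

def tokenize(text):
    """Split lowercase text into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(texts):
    """Assign an integer ID to every distinct token; ID 0 is reserved."""
    vocab = {"<unk>": 0}
    for text in texts:
        for token in tokenize(text):
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(text, vocab):
    """Map text to token IDs, using <unk> for tokens not in the vocabulary."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokenize(text)]

vocab = build_vocab(["The model reads text.", "The model writes text."])
ids = encode("The model reads poetry.", vocab)
print(ids)  # → [1, 2, 3, 0, 5]
```

The word "poetry" never appeared when the vocabulary was built, so it maps to the reserved unknown ID 0. Subword tokenizers reduce this problem by breaking rare words into smaller known pieces.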

Training the Model from Scratch

The process of training an LLM can be broken down into a number of phases, including initial parameter setting and iterative training cycles. In this step, the model learns how to detect patterns from training data and adjust its parameters to decrease error.
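The two phases named above, initial parameter setting and iterative training cycles, can be seen in a toy example. The sketch below trains a table of next-token scores with gradient descent on a cross-entropy loss. It is a deliberately tiny stand-in for an LLM, not a realistic training setup, and the token sequence, learning rate, and epoch count are arbitrary assumptions.

```python
import math

def softmax(logits):
    """Convert logits to probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(token_ids, vocab_size, epochs=50, lr=0.5):
    """Train a table of next-token logits with gradient descent."""
    # Initial parameter setting: all logits start at zero (uniform guess).
    logits = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(token_ids, token_ids[1:]))
    history = []
    for _ in range(epochs):  # iterative training cycles
        total_loss = 0.0
        for current, target in pairs:
            probs = softmax(logits[current])
            total_loss += -math.log(probs[target])  # cross-entropy error
            # Gradient of the loss w.r.t. the logits: probs - one_hot(target).
            for j in range(vocab_size):
                grad = probs[j] - (1.0 if j == target else 0.0)
                logits[current][j] -= lr * grad
        history.append(total_loss / len(pairs))
    return logits, history

# Toy sequence with a fixed pattern: 0 -> 1 -> 2 -> 0 ...
token_ids = [0, 1, 2, 0, 1, 2, 0, 1, 2]
logits, history = train_bigram(token_ids, vocab_size=3)
print(f"first epoch loss {history[0]:.3f}, last epoch loss {history[-1]:.3f}")
```

The average loss falls from one epoch to the next as the parameters adjust, which is the same error-reduction loop that frameworks automate at much larger scale.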

Effective training requires robust tools, hardware, and resources. High-performance GPUs are a must for large-scale models because of the enormous amount of computation training requires. Frameworks such as TensorFlow and PyTorch provide the infrastructure for creating and training LLMs.

Reinforcement Learning in LLMs

Reinforcement learning can greatly improve the capabilities of a pre-trained language model. With this approach, the model is trained to make choices based on feedback from its environment, learning from both successes and failures. Integrating reinforcement learning helps LLMs capture context and nuance across NLP tasks, which leads to more refined language generation.

This includes using reinforcement learning to let LLMs adjust their responses in light of feedback from users, producing more accurate and meaningful interactions.

Training Large Language Models (LLMs)

Training large language models comes with unique challenges and must be approached carefully. Training an LLM differs from training a typical machine learning model in data scale, architectural complexity, and, most importantly, computational resources.

The most common difficulty is handling the tremendous quantity of data required for training. Whereas smaller models can learn effectively from small datasets, LLMs need large, wide-ranging text corpora to reach top performance. In addition, the training process itself consumes substantial resources and requires high-end hardware and efficient training strategies.

Common LLM Training Techniques

Supervised vs. Self-Supervised Learning in LLMs

In LLM training, supervised and self-supervised learning each offer distinct benefits. Supervised learning is effective but requires labeled datasets, which can be difficult, time-consuming, or expensive to create. Self-supervised learning, by contrast, uses unlabeled data, allowing LLMs to learn from large amounts of text without extensive human involvement. For this reason, self-supervised learning is the primary method of LLM training, as it lets models learn about language from a wide variety of data sources.

Fine-Tuning with Prompt Engineering

Tailoring LLMs to specific applications is important, and prompt engineering is one method for doing so. It involves writing input prompts that direct how the model generates a response. Developers can improve the specificity and relevance of outputs by adjusting the prompts and by customizing how they constrain model behavior across different tasks.

Because an LLM is a generative model, the specific wording and context of a prompt strongly influence how informative its response is, which makes prompt design useful for developers tailoring an LLM to their needs.
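One way to picture prompt adjustment is as a template that combines an instruction, constraints, and worked examples. The helper below is a hypothetical sketch: its name, parameters, and the "Input:/Output:" layout are assumptions for illustration, and it only builds the prompt string rather than calling any model.

```python
def build_prompt(instruction, user_input, examples=(), constraints=()):
    """Assemble a prompt from an instruction, optional examples, and rules."""
    parts = [instruction.strip()]
    if constraints:
        rules = "\n".join(f"- {rule}" for rule in constraints)
        parts.append("Constraints:\n" + rules)
    for sample_input, sample_output in examples:
        parts.append(f"Input: {sample_input}\nOutput: {sample_output}")
    # The final block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(parts)

prompt = build_prompt(
    instruction="Summarize the input in one sentence.",
    user_input="Transformers process tokens in parallel using self-attention.",
    examples=[("LLMs learn from large text corpora.",
               "LLMs learn from lots of text.")],
    constraints=["Use plain language.", "Do not exceed 20 words."],
)
print(prompt)
```

Changing the instruction, the constraints, or the examples changes what the model is asked to do without touching the model itself, which is what makes prompts a quick lever for adapting behavior.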

Using Generative AI for Continuous Improvement

Generative AI techniques can augment an LLM by making it capable of continuous learning and adaptation. Such methods let LLMs generate useful data and insights derived from their training, which in turn helps them improve over time.

Real-world applications, such as chatbots that learn from user interactions or tools that auto-generate content based on emerging trends, show how effective generative AI can be when integrated with LLMs. This flexible approach helps businesses keep up with the fourth industrial revolution and create user experiences that are more meaningful and engaging.

Tools and Resources for LLM Training

Essential Tools for Building and Training LLMs

Several toolkits and frameworks have become important for building and training large language models. TensorFlow and PyTorch are highly popular Python libraries with extensive functionality for implementing deep learning algorithms and architectures. In addition, Hugging Face libraries provide pre-trained models and make it easy to work with transformer architectures, which greatly smooths the development path for beginners in LLMs.

This allows researchers and developers to take advantage of state-of-the-art techniques without starting from scratch, reducing LLM development time dramatically.

Hardware and Cloud Resources for Large Language Model Training

The hardware needs for training large language models can be significant. GPUs are crucial for the enormous computations involved in training, since they process large amounts of data in parallel.

Organizations increasingly rely on the cloud to scale their training with elastic compute resources, which can also absorb the sometimes sporadic demands of LLM inference.

The easiest way to deploy and scale an LLM training process is often through the specialized machine learning environments offered by cloud providers such as AWS, Google Cloud, or Microsoft Azure.
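A back-of-the-envelope calculation gives a feel for why hardware matters. The sketch below estimates only the memory needed to hold model weights, assuming a hypothetical 7-billion-parameter model stored in 16-bit (2 bytes) or 32-bit (4 bytes) precision. Actual training needs considerably more memory for gradients, optimizer state, and activations.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Hypothetical 7-billion-parameter model.
fp16_gb = weight_memory_gb(7_000_000_000, bytes_per_parameter=2)
fp32_gb = weight_memory_gb(7_000_000_000, bytes_per_parameter=4)
print(fp16_gb, fp32_gb)  # → 14.0 28.0
```

Even before any training overhead is counted, the weights of such a model can exceed the memory of many single GPUs, which is one reason large training runs are spread across multiple devices.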

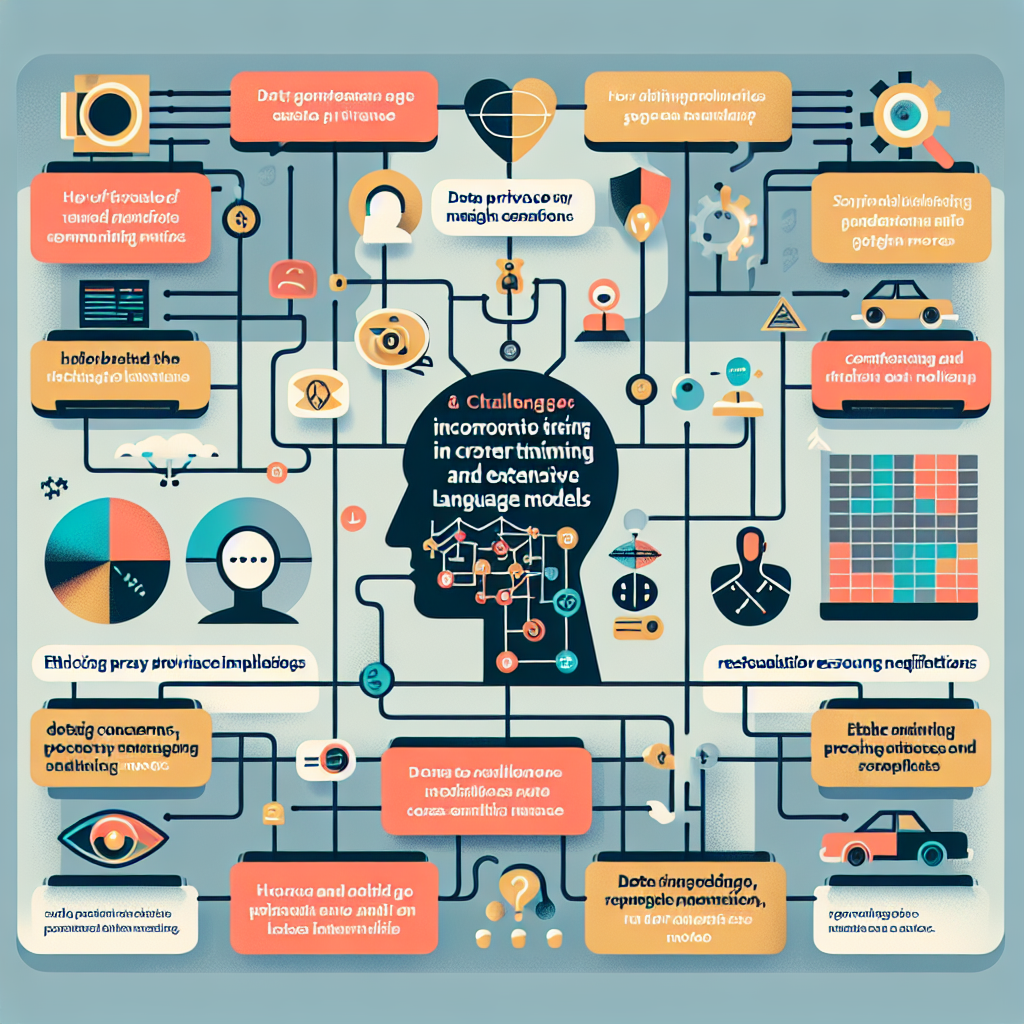

Challenges and Limitations of Building and Training LLMs

Large language models have their benefits, but building and training them also involves difficulties and limitations that need to be overcome.

Data Privacy and Security Concerns

Data privacy is an important concern in LLM training because of the nature of the information being processed. Legal and ethical frameworks require organizations to handle data responsibly and in line with the relevant compliance requirements. It is critical to protect user data during training and to prevent breaches, so as not to violate users' trust.

Ethical and Bias Considerations in LLMs

The potential for bias in large language models raises ethical concerns. LLMs can learn and reproduce biases present in their training data, producing outputs that reflect societal prejudices or noisy corpora. Mitigating such biases is important for fairness and equity in the use of AI.

Developers also need to take concrete steps to mitigate bias during training, such as working with varied and representative datasets and building fairness measures into the model, with the aim of reducing discriminatory outcomes for particular groups.

Understanding and Reducing the Hosting Costs for Machine Learning Models

Understanding and reducing the hosting costs for machine learning models involves several key factors. Large datasets are essential, as they help the model learn effectively. For instance, when developing an LLM from scratch, tasks like model training and evaluation are critical. This process typically involves feeding the model a corpus of text that includes both public and private data, which the model trains on at large scale.

GPT-3 and similar models are foundation models trained on autoregressive tasks (e.g., predicting the next word in a sequence). The final model is evaluated on held-out test data to check that it performs well without overfitting. Each step calls for careful thought about the training data and how long training will take, while ensuring that the model still produces meaningful outputs when it meets unseen inputs.
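Checking a model on held-out data can be sketched with a much simpler stand-in for an LLM: a word-frequency (unigram) model with add-one smoothing, scored by perplexity. The sentences, split fraction, and function names below are assumptions for illustration. The point is the workflow: fit on the training split only, then compare scores on both splits.

```python
import math
import random
from collections import Counter

def split_data(sentences, test_fraction=0.2, seed=0):
    """Shuffle and split sentences into training and held-out test sets."""
    shuffled = sentences[:]
    random.Random(seed).shuffle(shuffled)
    cut = max(1, int(len(shuffled) * test_fraction))
    return shuffled[cut:], shuffled[:cut]

def perplexity(sentences, counts, vocab_size):
    """Perplexity of an add-one-smoothed unigram model (lower is better)."""
    total = sum(counts.values())
    log_prob, n_tokens = 0.0, 0
    for sentence in sentences:
        for word in sentence.split():
            # Unseen words get a small non-zero probability via smoothing.
            prob = (counts[word] + 1) / (total + vocab_size)
            log_prob += math.log(prob)
            n_tokens += 1
    return math.exp(-log_prob / n_tokens)

sentences = [
    "the model reads text",
    "the model writes text",
    "the model learns patterns",
    "text contains patterns",
    "people write text",
]
train, test = split_data(sentences)
counts = Counter(word for s in train for word in s.split())
vocab_size = len(counts) + 1  # one extra slot for unseen words
train_ppl = perplexity(train, counts, vocab_size)
test_ppl = perplexity(test, counts, vocab_size)
print(f"train perplexity {train_ppl:.2f}, test perplexity {test_ppl:.2f}")
```

A test perplexity far above the training perplexity is a common sign that a model has memorized its training data rather than learned patterns that generalize.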

Generative AI Tools and Techniques

Generative AI tools use cutting-edge machine learning models to generate human-like text, making them key players in the world of natural language processing. Larger tools, such as those built on GPT-3, rely on models pre-trained on very large datasets in order to function properly.

A suitable training set is prepared and fed into the model so that it learns to predict the next word from context and produce meaningful sentences. The model size and the number of layers play an important role in how well this works for specific tasks.

To further enhance performance, techniques like reinforcement learning from human feedback are employed. In practice, this goes along with tuning hyperparameters such as the learning rate and evaluating the model, for example on a single GPU, to check the results.

LLM leaderboards showcase these advanced models, which are capable of tackling a range of language challenges. By continually refining these tools, researchers aim to build large language models that not only understand context but also engage users with high-quality responses.

Conclusion

To summarize, building a large language model from scratch is complicated but rewarding. This article has outlined the main components, a step-by-step procedure, and the difficulties of building LLMs, underlining their growing importance in advancing AI/ML. As organizations begin to see the benefits of LLMs for understanding language and generating content, finding best practices for training strategies and ethical considerations becomes important.

We invite readers to consider the different ways LLMs might be harnessed while keeping in mind the hurdles and prerequisites involved in creating them. Large language models, and AI and natural language processing more broadly, open up wide possibilities for innovation.

FAQs

Q1: What is a large language model, and how does it work?

A large language model is an AI system that excels at understanding and generating human text. It does this by training on vast text datasets, learning patterns, and predicting what comes next, which lets it generate responses based on context.

Q2: What is the difference between supervised and self-supervised learning?

Supervised learning must be trained on labeled data, whereas self-supervised learning can use abundant unlabeled text by predicting parts of the input. Because it is efficient and requires far fewer human-annotated examples, LLM training relies mostly on self-supervised learning.

Q3: What are transformers, and why are they crucial for LLMs?

A transformer is a neural network architecture, introduced by Google researchers in 2017, that has become the leading architecture for processing language. Transformers are valuable for LLMs because they allow efficient training and better language comprehension.

Q4: How much data is needed to train a large language model from scratch?

In general, a large language model needs to be trained on hundreds of gigabytes (often multiple terabytes) of text data, amounting to billions or even trillions of words, to develop the breadth and depth of understanding that comes from seeing a diverse range of text.

Q5: What are the main challenges in building and training LLMs?

The major challenges include data privacy and security concerns, ethical issues around bias, and the high cost of the resources needed to train huge models.

Hi! I’m Muhammad Shahzaib. As a content writer focused on technology, I constantly seek out trending topics to deliver fresh, insightful articles. My goal is to keep readers informed and engaged with the latest and emerging innovations in the tech world.